Performance penalty of storage virtualization

Jul 1, 2024 by Thibault Debatty

https://cylab.be/blog/349/performance-penalty-of-storage-virtualization

In a previous blog post, I showed how to use sysbench to benchmark a Linux system. I ran the tool on various systems I had access to, and I was staggered by the performance penalty of virtual storage: a virtual disk (VDI) is roughly 10 times slower than the actual disk it is reading from or writing to. In this blog post, I want to show the results of some additional tests that, sadly enough, only confirm this observation…

Host Filesystem

First, I wanted to test whether the filesystem used on the host has an impact on the performance of the virtual drive. So I took an SSD, then created and formatted 3 partitions:

- ext4

- xfs

- btrfs

On each partition, I used sysbench to evaluate storage performance. Then I used VirtualBox (7.0) to create a VM with a virtual disk (VDI) and executed sysbench in the VM, with the same parameters. The results are presented below, expressed in MiB/s (higher is better).
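As a rough sketch of what such a test run can look like, the snippet below builds the sysbench `fileio` command lines for the four tests reported in the tables. The file size and duration are assumptions chosen for illustration (this post does not state the exact parameters), and the helper name `sysbench_commands` is hypothetical.

```python
import shlex

# Assumed parameters: this post does not state the file size or duration used.
FILE_TOTAL_SIZE = "4G"
DURATION_S = 60

# sysbench fileio test modes matching the four rows of the result tables.
TEST_MODES = {
    "Random read": "rndrd",
    "Random write": "rndwr",
    "Sequential read": "seqrd",
    "Sequential write": "seqwr",
}


def sysbench_commands(mode, size=FILE_TOTAL_SIZE, duration=DURATION_S):
    """Return the prepare/run/cleanup command lines for one fileio test mode."""
    base = [
        "sysbench",
        "fileio",
        f"--file-total-size={size}",
        f"--file-test-mode={mode}",
    ]
    return [
        base + ["prepare"],                    # create the test files
        base + [f"--time={duration}", "run"],  # run the benchmark itself
        base + ["cleanup"],                    # remove the test files
    ]


if __name__ == "__main__":
    # Only print the commands; run them manually on the host and in the guest.
    for label, mode in TEST_MODES.items():
        print(f"# {label}")
        for cmd in sysbench_commands(mode):
            print(shlex.join(cmd))
```

Running the same printed commands on the host partition and inside the VM keeps the two measurements comparable.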

ext4

| MiB/s | Host | Guest |
|---|---|---|
| Random read | 4536 | 47 |
| Random write | 1699 | 202 |
| Sequential read | 11511 | 978 |
| Sequential write | 3387 | 2415 |

xfs

| MiB/s | Host | Guest |
|---|---|---|
| Random read | 4381 | 41 |
| Random write | 1592 | 209 |
| Sequential read | 14372 | 923 |
| Sequential write | 4648 | 2106 |

btrfs

| MiB/s | Host | Guest |
|---|---|---|
| Random read | 4512 | 82 |
| Random write | 110 | 256 |
| Sequential read | 13708 | 1000 |
| Sequential write | 717 | 809 |

These results only confirm what I found previously:

- the performance of a virtual disk is roughly 1/10 of the performance of the host drive;

- ext4 and xfs behave roughly the same;

- btrfs seems to be slower for write operations.
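To put numbers on the "roughly 1/10" estimate, the guest-to-host ratio can be computed for every row of the three tables above. This is only a small sketch: the figures are copied from the tables, and the function name `guest_to_host_ratio` is made up for the example.

```python
# Throughput in MiB/s, copied from the three tables above: (host, guest).
RESULTS = {
    "ext4": {
        "Random read": (4536, 47),
        "Random write": (1699, 202),
        "Sequential read": (11511, 978),
        "Sequential write": (3387, 2415),
    },
    "xfs": {
        "Random read": (4381, 41),
        "Random write": (1592, 209),
        "Sequential read": (14372, 923),
        "Sequential write": (4648, 2106),
    },
    "btrfs": {
        "Random read": (4512, 82),
        "Random write": (110, 256),
        "Sequential read": (13708, 1000),
        "Sequential write": (717, 809),
    },
}


def guest_to_host_ratio(host, guest):
    """Fraction of the host throughput that the guest achieves."""
    return guest / host


for fs, tests in RESULTS.items():
    for test, (host, guest) in tests.items():
        ratio = guest_to_host_ratio(host, guest)
        print(f"{fs:5} {test:16} guest/host = {ratio:.3f}")
```

The ratio depends heavily on the workload: random reads in the guest reach only about 1 to 2% of the host throughput, sequential reads about 6 to 9%, while on btrfs the guest write results are actually higher than the host's.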

Hypervisor

For the second test, I wanted to know whether the hypervisor and the format of the virtual disk have an impact on storage performance. Indeed, by default:

- VirtualBox uses Virtual Disk Image (VDI) to store a virtual drive,

- QEMU uses QEMU Copy On Write (QCOW2) and

- VMware uses Virtual Machine Disk (VMDK).

These formats may result in different storage performance in the guest VM. So I created a single host partition, formatted as ext4, and created one VM using VirtualBox, one using QEMU and one using VMware Workstation. In each VM, I ran the sysbench tests. The results are presented in the table below, together with the results from running the tests directly on the host.

| MiB/s | Host | VirtualBox (VDI) | QEMU (QCOW2) | VMware Workstation (VMDK) |
|---|---|---|---|---|
| Random read | 4536 | 47 | 217 | 299 |
| Random write | 1699 | 202 | 708 | 400 |
| Sequential read | 11511 | 978 | 4152 | 1156 |
| Sequential write | 3387 | 2415 | 1025 | 3534 |

The results across the different hypervisors are quite mixed, but overall, all virtual drives are much slower than the host drive.

The sequential write performance of VMware (VMDK) seems better than that of the two other hypervisors (and even better than the host filesystem). This is probably due to the host cache, which is enabled by default in VMware Workstation, while it is disabled by default in VirtualBox and QEMU to avoid significant data loss in case of a power outage or host crash.
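A guest result that exceeds the host result is a good hint that some caching layer is involved. The sketch below is a simple sanity check over the hypervisor table; the values are copied from that table, and the function name `faster_than_host` is only illustrative.

```python
# Throughput in MiB/s, copied from the hypervisor comparison table.
HOST = {
    "Random read": 4536,
    "Random write": 1699,
    "Sequential read": 11511,
    "Sequential write": 3387,
}

GUESTS = {
    "VirtualBox (VDI)": {
        "Random read": 47, "Random write": 202,
        "Sequential read": 978, "Sequential write": 2415,
    },
    "QEMU (QCOW2)": {
        "Random read": 217, "Random write": 708,
        "Sequential read": 4152, "Sequential write": 1025,
    },
    "VMware Workstation (VMDK)": {
        "Random read": 299, "Random write": 400,
        "Sequential read": 1156, "Sequential write": 3534,
    },
}


def faster_than_host(host, guests):
    """Return (hypervisor, test) pairs where the guest outperforms the host."""
    return [
        (name, test)
        for name, results in guests.items()
        for test, value in results.items()
        if value > host[test]
    ]


print(faster_than_host(HOST, GUESTS))
# → [('VMware Workstation (VMDK)', 'Sequential write')]
```

Only the VMware sequential write result is flagged, which is consistent with the host cache explanation.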

Block and LVM pools

To avoid the potential performance penalty of the host filesystem, I used QEMU to create virtual disks with ‘almost’ direct access to the hardware: one using a block device and one using an LVM volume group.

For the block device, I created a DOS (MBR) partition table on my device, then in Virtual Machine Manager I created a storage pool of type ‘Pre-Formatted Block Device’. I created a VM with a virtual disk from this pool, and ran the sysbench tests.

For LVM, I created an LVM volume group on my device:

sudo pvcreate /dev/sda

sudo vgcreate vg_sda /dev/sda

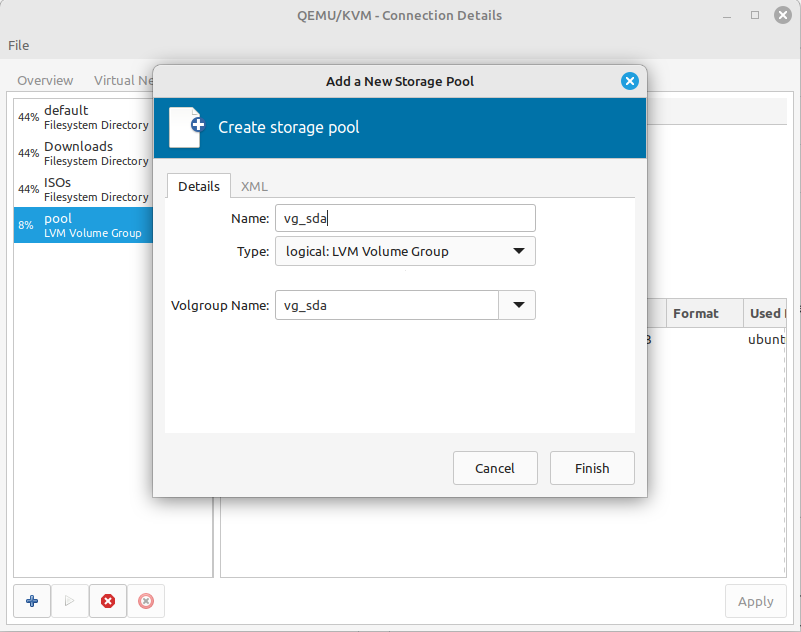

Then I used Virtual Manager to create a QEMU pool:
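For readers who prefer not to use the GUI, a storage pool of this kind can also be described as libvirt pool XML. The snippet below is a minimal sketch that generates such a definition for the `vg_sda` volume group created above. It assumes libvirt's `logical` pool type, and the helper name `logical_pool_xml` is hypothetical. It is not what was used for the tests in this post.

```python
import xml.etree.ElementTree as ET


def logical_pool_xml(vg_name):
    """Build a libvirt storage pool definition backed by an LVM volume group."""
    pool = ET.Element("pool", type="logical")
    ET.SubElement(pool, "name").text = vg_name

    # The source is the existing volume group (created with vgcreate).
    source = ET.SubElement(pool, "source")
    ET.SubElement(source, "name").text = vg_name
    ET.SubElement(source, "format", type="lvm2")

    # Logical volumes of the group appear under /dev/<vg_name>.
    target = ET.SubElement(pool, "target")
    ET.SubElement(target, "path").text = f"/dev/{vg_name}"

    return ET.tostring(pool, encoding="unicode")


print(logical_pool_xml("vg_sda"))
```

The resulting XML could then be saved to a file and loaded with `virsh pool-define`, assuming libvirt is installed on the host.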

I created a VM with a virtual drive stored in the LVM volume group. Once again, I ran sysbench and got the following results:

| MiB/s | Host | LVM | Block |
|---|---|---|---|
| Random read | 4536 | 29 | 29 |
| Random write | 1699 | 195 | 23 |
| Sequential read | 11511 | 480 | 469 |
| Sequential write | 3387 | 387 | 391 |

This time, the results were even worse than with classic (filesystem-based) storage pools.
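To make this comparison explicit, the QEMU results from the hypervisor table (QCOW2 on ext4) can be set against the LVM and block pool results. The sketch below only re-uses the published numbers; the function name `slowdown_vs` is made up for the example.

```python
# QEMU guest throughput in MiB/s, copied from the two tables above.
QEMU_BACKENDS = {
    "QCOW2 on ext4": {
        "Random read": 217, "Random write": 708,
        "Sequential read": 4152, "Sequential write": 1025,
    },
    "LVM": {
        "Random read": 29, "Random write": 195,
        "Sequential read": 480, "Sequential write": 387,
    },
    "Block": {
        "Random read": 29, "Random write": 23,
        "Sequential read": 469, "Sequential write": 391,
    },
}


def slowdown_vs(reference, other):
    """Return how many times slower `other` is than `reference`, per test."""
    return {test: reference[test] / other[test] for test in reference}


reference = QEMU_BACKENDS["QCOW2 on ext4"]
for backend in ("LVM", "Block"):
    factors = slowdown_vs(reference, QEMU_BACKENDS[backend])
    for test, factor in factors.items():
        print(f"{backend:5} {test:16} {factor:.1f}x slower than QCOW2 on ext4")
```

Every factor is greater than 1: in these measurements, both the LVM and the block pool are slower than the QCOW2 image on ext4 in all four tests.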

Conclusion

Well, it’s pretty disappointing: virtual disks are almost 10 times slower than host drives. Moreover, with QEMU, block or LVM pools don’t help!

There are other configurations that I did not test in this post, like iSCSI, GlusterFS and raw disk images. These will probably be the subject of a follow-up…

This blog post is licensed under CC BY-SA 4.0.