Laravel database transactions & mysqldump

Oct 27, 2021 by Zacharia Mansouri | 5496 views

https://cylab.be/blog/184/laravel-database-transactions-mysqldump

Did you know that mysqldump can create inconsistent backups if you do not use database transactions in Laravel? Let’s discover that issue and address it in order to avoid it. After setting up and running locally a Laravel project that will serve as a demo for that specific issue, we will observe the necessity of using transactions and how to implement them. We’ll also discover many more tips while trying to understand what happens under the MySQL hood.

This blogpost assumes that you already know the basics of Laravel and already installed composer and MySQL.

Setting up the Laravel project

Let’s create a project called transaction.

composer create-project laravel/laravel transaction

Let’s then create a model called Test:

php artisan make:model Test -m

Here is how the model is defined for Laravel in app/Models/Test.php:

<?php

namespace AppModels;

use IlluminateDatabaseEloquentFactoriesHasFactory;

use IlluminateDatabaseEloquentModel;

class Test extends Model

{

use HasFactory;

/**

* The attributes that are mass assignable.

* @var array

*/

protected $fillable = [

'name'

];

}

Let’s also update its associated migration, defined in database/migrations/xxxx_xx_xx_xxxxxx_create_tests_table.php with the following code content:

<?php

use IlluminateDatabaseMigrationsMigration;

use IlluminateDatabaseSchemaBlueprint;

use IlluminateSupportFacadesSchema;

class CreateTestsTable extends Migration

{

/**

* Run the migrations.

* @return void

*/

public function up()

{

Schema::create('tests', function (Blueprint $table) {

$table->id();

$table->string('name');

$table->timestamps();

});

}

/**

* Reverse the migrations.

* @return void

*/

public function down()

{

Schema::dropIfExists('tests');

}

}

We also need a controller for creating and deleting the Test instances. Let’s create it:

php artisan make:controller TestController

And let’s define the create and delete functions inside that controller:

<?php

namespace AppHttpControllers;

use IlluminateHttpRequest;

use IlluminateSupportFacadesDB;

use AppModelsTest;

class TestController extends Controller

{

/**

* The number of tests to create/update.

* @var int

*/

private $nbTests;

/**

* Create a new controller instance.

* @return void

*/

public function __construct()

{

$this->nbTests = 1000;

}

/**

* Create Test instances.

* @param Request $request, the request.

* @return IlluminateDatabaseEloquentCollection<Test> the Test instances.

*/

public function create(Request $request)

{

if (Test::count() > 0) {

return response()->json([

'message' => 'Tests already created'

], 409);

}

for ($i = 1; $i <= $this->nbTests; $i++) {

Test::create([

'name' => 'test' . $i

]);

}

return Test::all();

}

/**

* Delete the Test instances.

* @param Request $request, the request.

* @return IlluminateDatabaseEloquentCollection<Test> the Test instances.

*/

public function delete(Request $request)

{

Test::truncate();

return Test::all();

}

}

Use the delete function when you want to delete the Test instances since it will also reset the tests table id to 1. These ids will be used when we will add the update function that retrieves objects by their ids.

Here are the routes linked to those functions in routes/web.php:

<?php

use IlluminateSupportFacadesRoute;

use AppHttpControllersTestController;

Route::get('/create', [TestController::class, 'create']);

Route::get('/delete', [TestController::class, 'delete']);

Creating the database

Let’s quickly create a database with the following data:

- database name: transaction

- user name: transaction

- user password: ::::1234SuperPass

sudo mysql

mysql> CREATE DATABASE transaction

mysql> USE transaction;

mysql> CREATE USER 'transaction'@'localhost' IDENTIFIED BY '::::1234SuperPass';

mysql> GRANT ALL PRIVILEGES ON transaction.* TO 'transaction'@'localhost';

mysql> GRANT PROCESS ON *.* TO 'transaction'@'localhost'; #optional, see explanation hereunder

mysql> FLUSH PRIVILEGES;

PROCESS is a global privilege that cannot be granted for individual databases and that gives access to information about threads executing within the MySQL server, therefore allowing a user with that global privilege to be able to read every query from any user.

The mysqldump command has an option called –no-tablespaces that allows you to avoid having to grant the PROCESS privilege to a user for the whole database. Note that tablespaces are files stored in the host file system that hold data for tables and indexes. The information about those files is in a table that requires the PROCESS privilege to be read. The tablespaces are not needed in routine backups, so we can safely use the –no-tablespaces option when using mysqldump.

Dumping the database is achieved using the following command:

mysqldump --user="transaction" --password="::::1234SuperPass" --host="localhost" --no-tablespaces transaction

Migrating the Laravel tables to MySQL

All what is left to do is to create the tables needed by Laravel. Before that, Laravel needs to know how to access the database in which it will create the tables, so let’s change some .env values:

DB_CONNECTION=mysql

DB_HOST=127.0.0.1

DB_PORT=3306

DB_DATABASE=transaction

DB_USERNAME=transaction

DB_PASSWORD=::::1234SuperPass

Now the tables can be created:

php artisan migrate

Of course, you need to start your Laravel server:

php artisan serve

Mass updating

Let’s create a bunch of Test instances. The TestController has a private attribute $nbTests that defines the number of instances to create. Its default value is 1000 and we’ll see afterwards if it needs to be increased. To create the instances, just open you browser and navigate to http://localhost:8000/create. It should take some time before creating all the instances. If you receive a timeout error, just go to http://localhost:8000/delete, decrease the value of $nbTests in the TestController and go back to http://localhost:8000/create.

Now, let’s add an update function in the TestController. The update will change the name of all the Test instances.

/**

* Update the Test instances.

* @param Request $request, the request.

* @return IlluminateDatabaseEloquentCollection<Test> the Test instances.

*/

public function update(Request $request)

{

if (Test::count() == 0) {

return response()->json([

'message' => 'Tests must be first created'

], 409);

}

error_log('Starting update...');

$init = time();

for ($i = 1; $i <= $this->nbTests; $i++) {

$test = Test::find($i);

if (str_starts_with($test->name, 't')) {

$test->name = 'quest' . $i;

} else {

$test->name = 'test' . $i;

}

$test->save();

}

error_log('Updated. Took ' . (time() - $init) . ' seconds.');

return Test::all();

}

The associated route in web.php:

Route::get('/update', [TestController::class, 'update']);

What we want is to avoid database dumps to have two rows of Test instances that do not start with the same name (they all start with ‘test’ or they all start with ‘quest’. We will assume that the logic of a dump is not consistent regarding our project whenever that rule is not respected.

In order to have the time to launch a mass update and then dump the database, ensure that the $nbTests in the TestController is big enough to make the mass update take at least 20 seconds.

Now, just go to http://localhost:8000/update to launch the mass update, and then directly launch the mysqldump command in a terminal:

mysqldump --user="transaction" --password="::::1234SuperPass" --host="localhost" --no-tablespaces transaction

What you should get is a dump with some Test instances whose name start with ‘test’ while other start with ‘quest’… which reveals an inconsistency issue! Let’s see how to address it in the next section.

Laravel database transactions

A transaction is a closure that will run a set of operations (e.g. updates) in the database and automatically commit those operations only once when it finishes and if no error occurred. Otherwise, all the operations are rolled back and the database does not change. A transaction locks the tables it will write to avoid concurrent writes that could lead to consistency errors. The committing and locking aspects of a transaction are what make possible for a set of operations to be treated as an atomic operation.

Laravel gives you many ways to use transactions. The most simple one being:

use IlluminateSupportFacadesDB;

use AppModelsTest;

DB::transaction(function () {

Test::create([

'name' => 'testA',

]):

Test::create([

'name' => 'testB',

]):

});

It is possible to retrieve the data from a transaction:

use IlluminateSupportFacadesDB;

use AppModelsTest;

$tests = DB::transaction(function () {

$tests = [];

array_push($tests, Test::create([

'name' => 'testA',

])):

array_push($tests, Test::create([

'name' => 'testB',

])):

return $tests

});

There is also a way to tell how many times the transaction should retry when it encounters a deadlock (due to another query locking the same resource in the database). That number of times is specified as the second argument of the transaction function:

use IlluminateSupportFacadesDB;

use AppModelsTest;

DB::transaction(function () {

Test::create([

'name' => 'testA',

]):

Test::create([

'name' => 'testB',

]):

}, 5);

Finally, it is possible to manually manage the transactions:

use IlluminateSupportFacadesDB;

DB::beginTransaction();

// if an error occured, rollback and close the transaction

DB::rollBack();

// if everything went well, commit and close the transaction

DB::commit();

Let’s now apply the concept of transaction to our mass update in TestController by adding a new function called updateWithTransaction:

/**

* Update the Test instances.

* @param Request $request, the request.

* @return IlluminateDatabaseEloquentCollection<Test> the Test instances.

*/

public function updateWithTransaction(Request $request)

{

if (Test::count() == 0) {

return response()->json([

'message' => 'Tests must be first created'

], 409);

}

DB::transaction(function () {

error_log('Starting update...');

$init = time();

for ($i = 1; $i <= $this->nbTests; $i++) {

$test = Test::find($i);

if (str_starts_with($test->name, 't')) {

$test->name = 'quest' . $i;

} else {

$test->name = 'test' . $i;

}

$test->save();

}

error_log('Updated. Took ' . (time() - $init) . ' seconds.');

});

return Test::all();

}

The associated route in web.php:

Route::get('/updatetransaction', [TestController::class, 'updateWithTransaction']);

As you can realize, the transaction takes less time to update table rows since a transaction does not commit after each row update but only once after having updated all the rows.

Let’s do again our previous experience by going to http://localhost:8000/updatetransaction to launch the mass update (with a transaction), and then directly launch the mysqldump command:

mysqldump --user="transaction" --password="::::1234SuperPass" --host="localhost" --no-tablespaces transaction

Now you should observe that the mysqldump process waits for the mass update to finish before dumping the content of the tests table. Using a Laravel transaction is therefore needed to ensure the consistency of our database backups.

Going further

Still interested in how mysqldump and a Laravel transaction interact? Then you should also read this section.

For curiosity purposes, let’s try another option of mysqldump that is called –single-transaction. That option will make mysqldump read the whole database at the time of its launch, without locking anything since that option disables what is called a global read lock. Without the single transaction option, that lock would ensure that the current mysqldump session is not able to write anything. It puts the database in read-only mode for that session. However, any other session trying to write in the database will be put to a waiting state until the global read lock is removed. The single transaction option thus dumps the consistent state of the database at the time when START TRANSACTION was issued without blocking any applications (cf. the man page of mysqldump).

Here is the full mysqldump command when adding that option:

mysqldump --user="transaction" --password="::::1234SuperPass" --host="localhost" --no-tablespaces --single-transaction transaction

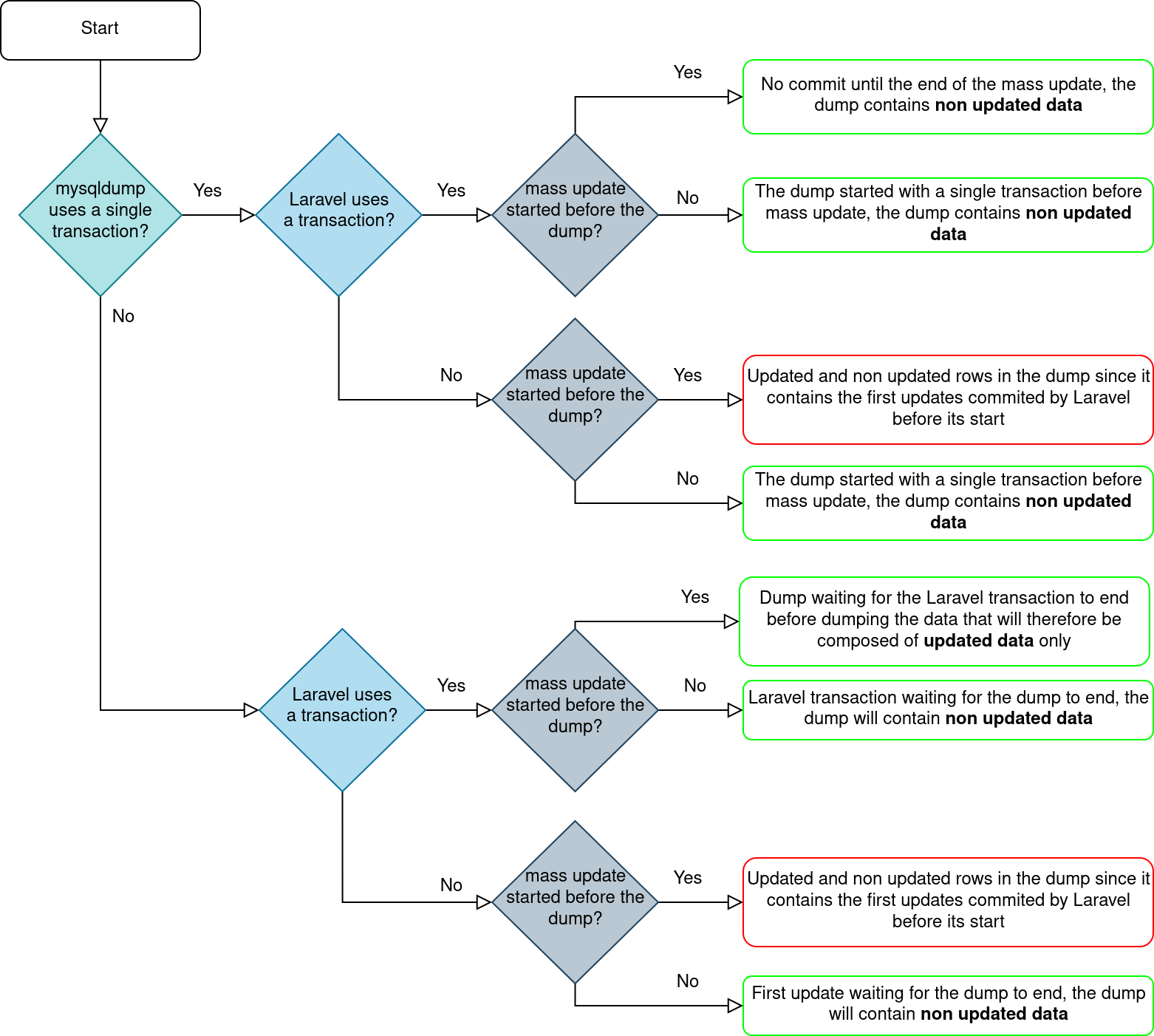

Let’s combine the concepts we acquired through that blogpost and see what happens when we vary the following parameters:

- using or not the mysqldump –single-transaction option;

- using or not a Laravel transaction:

- starting the mass update before or after the dump.

For the following diagram, we consider that, during a certain timespan, the mass update and the dump will run concurrently. We also consider that the dump is much faster than the mass update and that one of the two is always launched right after the other.

We see that there are two safe options. Both of them require to use a Laravel transaction. Their difference lies in the use of the single transaction as a mysqldump option:

- if it is used, then the dump will not contain any updated data;

- otherwise, the dump will contain updated or non updated data depending on which process starts first.

Those two options will be checked for more specific cases, where the starting time and the duration of both processes will vary:

- changing the starting time of each process is just a matter of making a request to the Laravel server before or after launching the mysqldump command;

- changing the process duration of the Laravel transaction requires to change the value of $nbTests in the TestController (the bigger it is, the longer the process);

- changing the process duration of the dump requires to add more instances in the tests table, e.g with a new function in the TestController (the more instances, the longer the process).

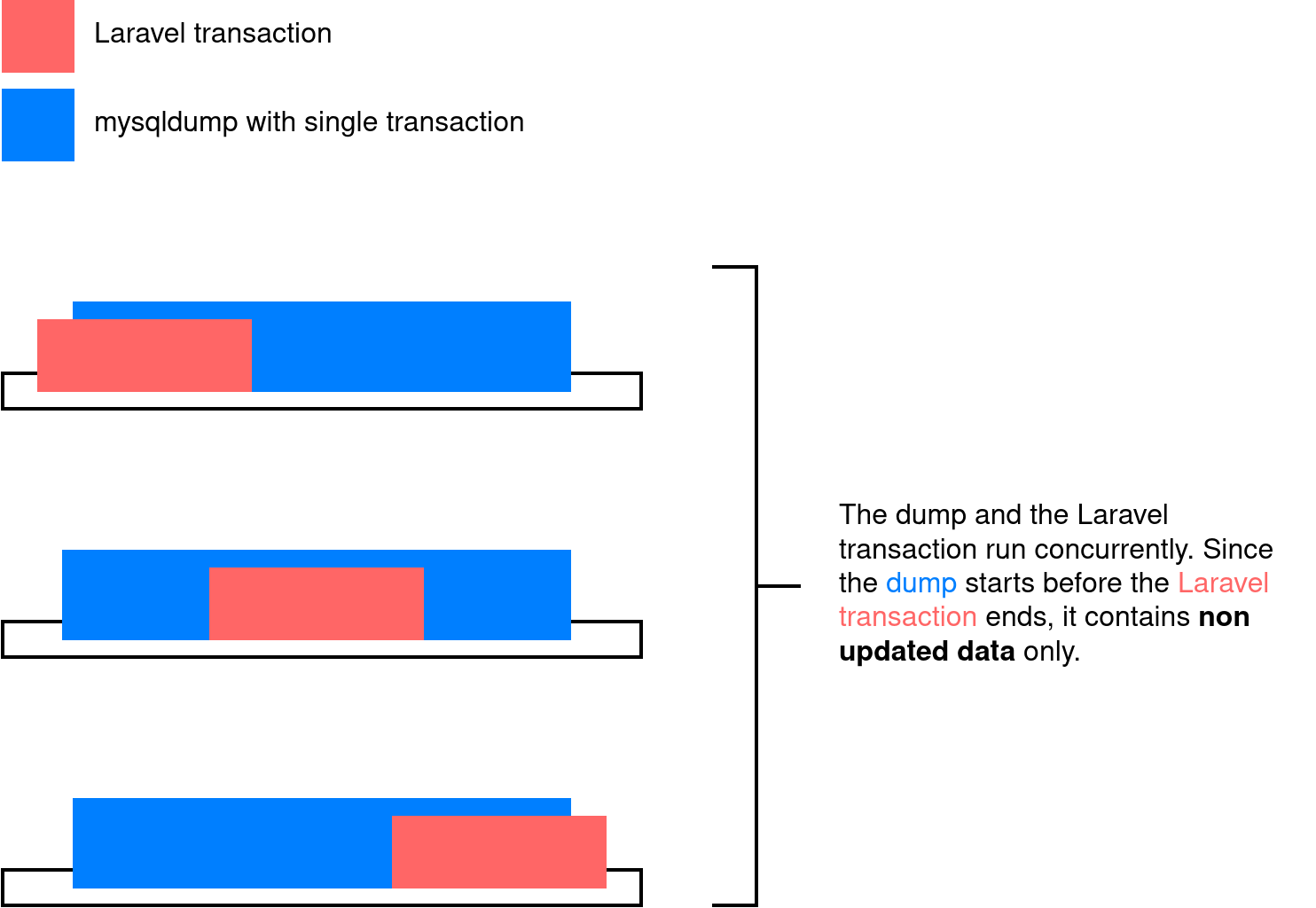

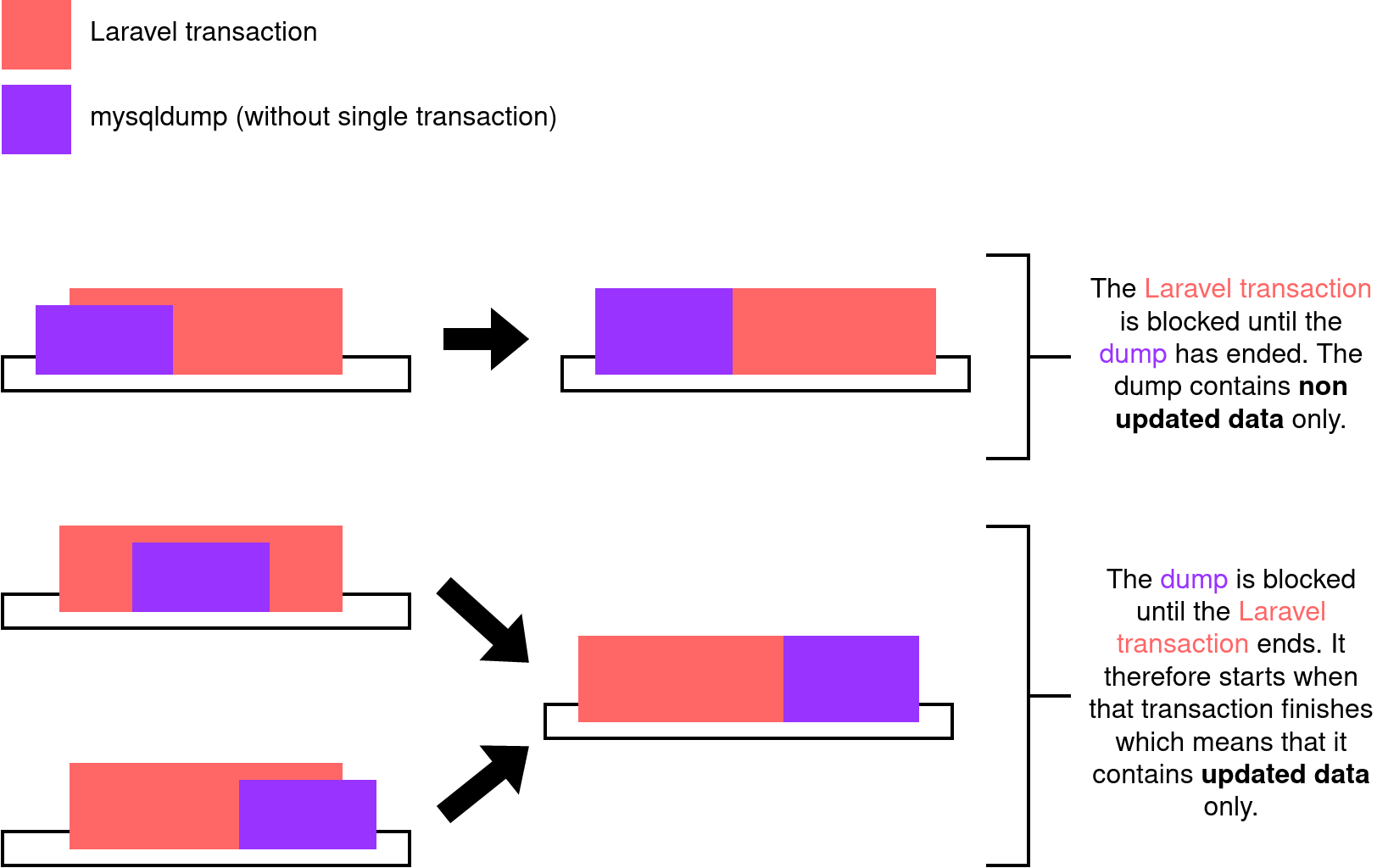

In all the following diagrams, each process is linked to a colored rectangle whose left side represents its starting time and its width represents its duration.

Let’s now dig the first option, using both Laravel transaction and mysqldump single transaction.

If the Laravel transaction takes more time than the dump:

Here we observe that both processes run concurrently and the dump contains non updated data only.

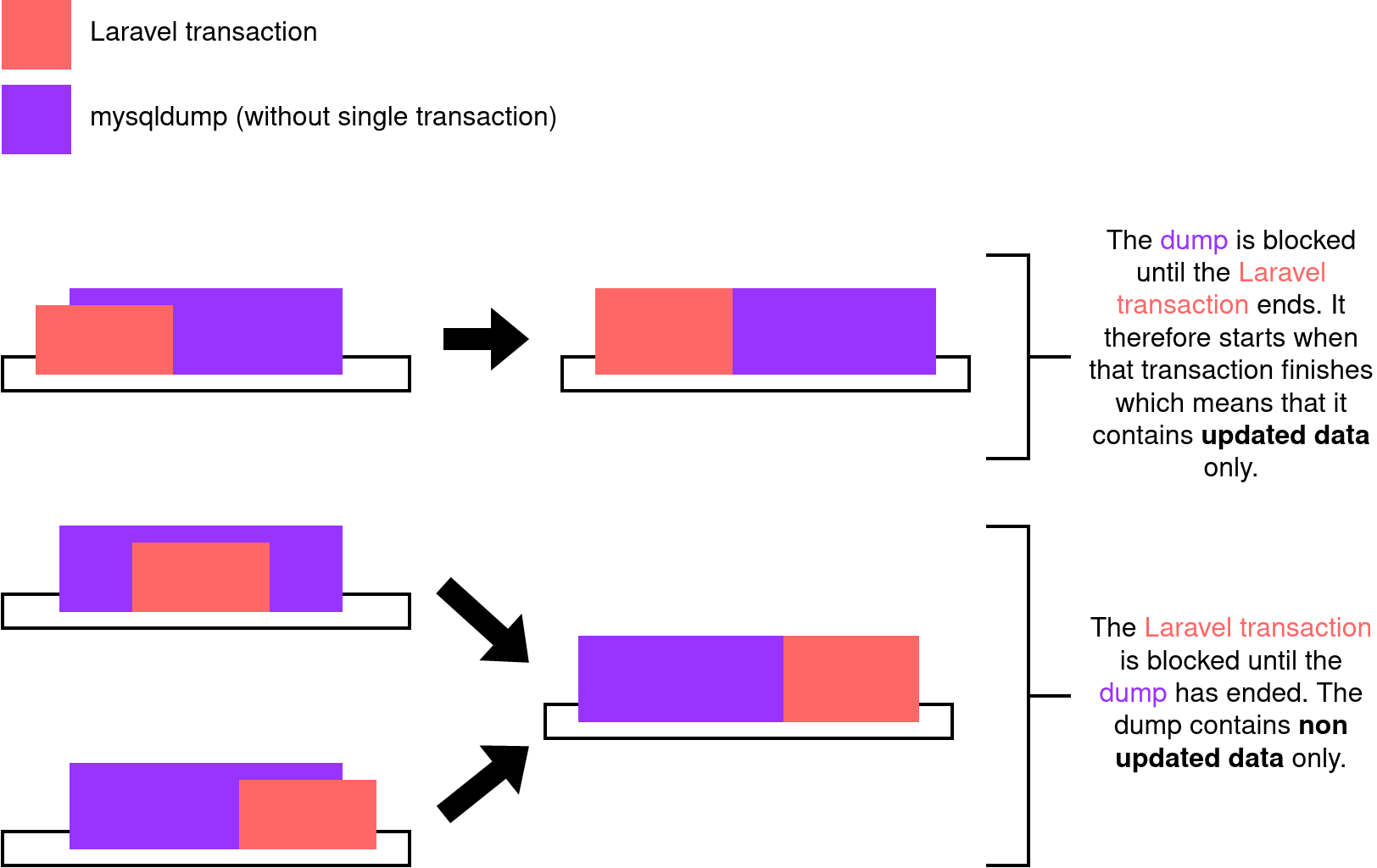

Let’s then dig the second option, using Laravel transaction and mysqldump without the single transaction option.

If the Laravel transaction takes more time than the dump:

Otherwise:

We thus observe that:

- if the dump starts before the Laravel transaction, then the transaction is blocked until the dump has ended, since a global read lock is used, and that dump contains non updated data only;

- if the dump starts after the Laravel transaction, that dump is blocked until the Laravel transaction ends, it therefore starts when that transaction finishes which means that it contains updated data only.

Whilst the consistency is ensured regardless of whether mysqldump uses a single transaction or not, using it avoids blocking other processes trying to write in the database while always dumping non updated data, no matter when the concurrent processes started or how long their duration is. The man page of mysqldump also insists on the fact that the single transaction option ensures dumping the consistent state of the database.

The best solution to ensure the consistency of a database dump is therefore to use Laravel transactions for data that should be created/updated in a dependent manner, along using the mysqldump single transaction option for that dump.

Sources

- Laravel DB transactions:

- MySQL PROCESS:

- Single transaction option (+ read lock) for mysqldump:

- PROCESS vs tablespaces:

This blog post is licensed under

CC BY-SA 4.0