Integrate a Large Language Model (LLM) in your PHP application

Jan 31, 2025 by Thibault Debatty

https://cylab.be/blog/391/integrate-a-large-language-model-llm-in-your-php-application

Large language models (LLMs) have been a real technological leap forward, enabling intelligent chatbots, coding assistants and much more. However, integrating these powerful models into your own applications can seem daunting. In this post, we’ll see how to build a simple LLM assistant in PHP.

For this example I’ll use the LLMs offered by Scaleway. At the time of writing, the feature is in beta, which means you can try it for free!

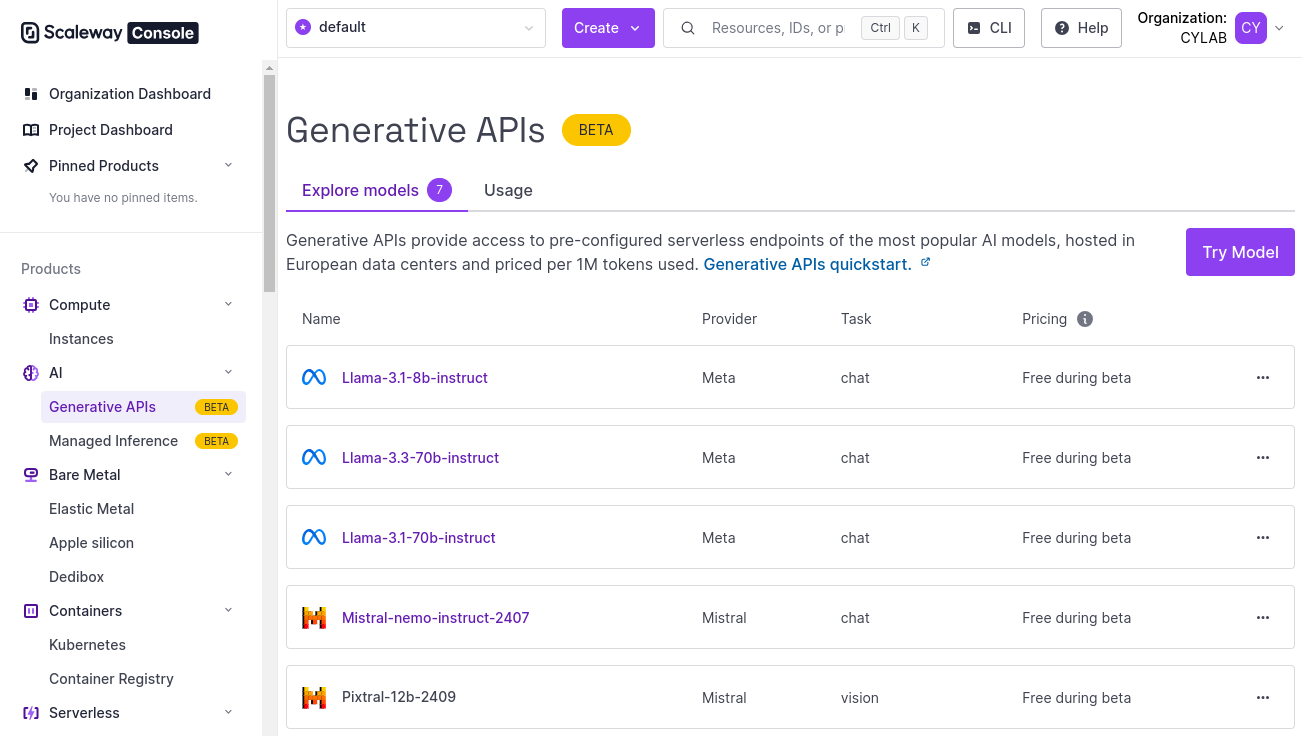

- Head to https://scaleway.com and create an account (or sign in)

- In the menu on the left, select Generative APIs

- Here you can see the available models and test them directly from the Scaleway Console

Create an application API Key

To run your PHP code, you’ll need an API key. There are two ways to get one:

- Create a personal key, which has the same access rights as your own user account. If this key is stolen, whoever holds it gets full control over your Scaleway account; or

- Create an application key with limited access rights

For this example I’ll show how to create an application API key with limited access rights. It takes 3 main steps:

- Create an application

- Create a policy for the application

- Create the access key for the application

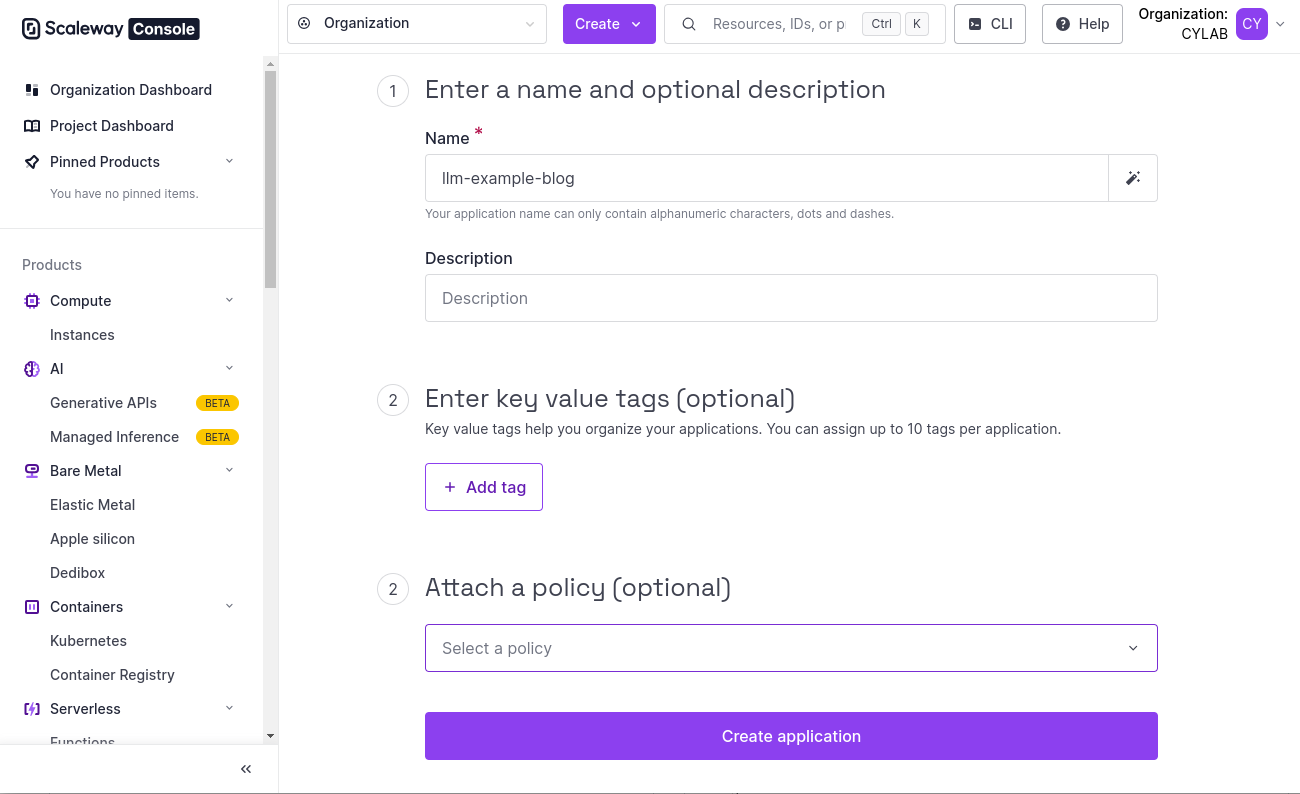

1. Create an application

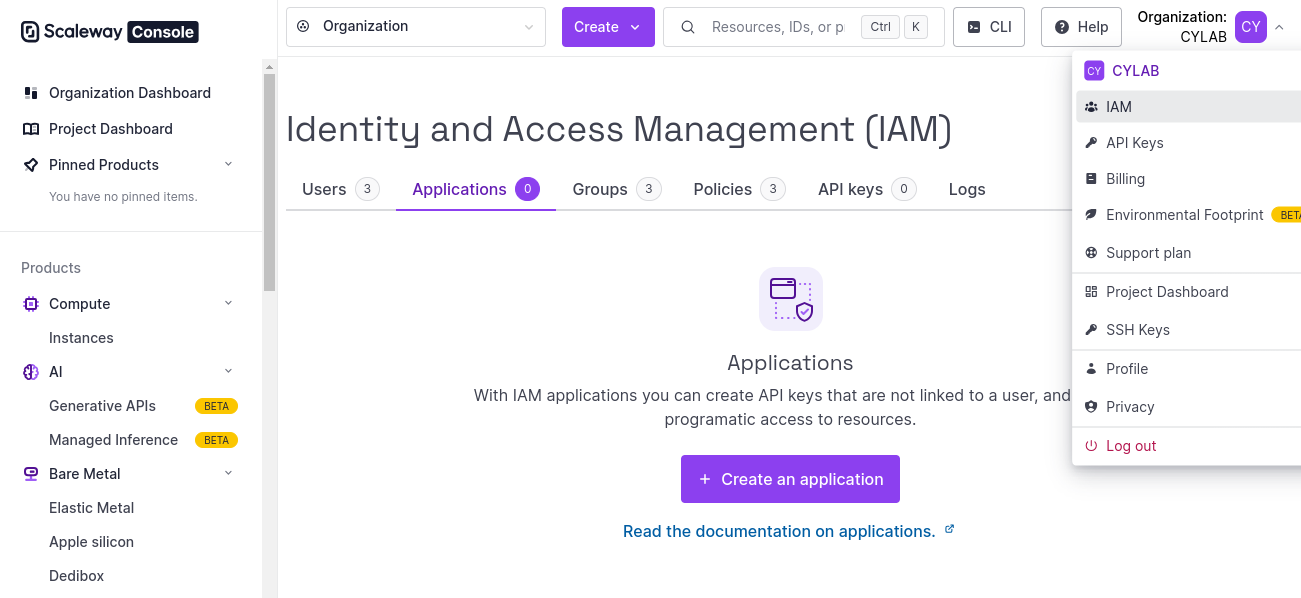

- In the Organization menu (in the top right corner), select IAM (Identity and Access Management)

- Open the Applications tab

- Create a new application. Only the name is required; you can leave all other fields empty

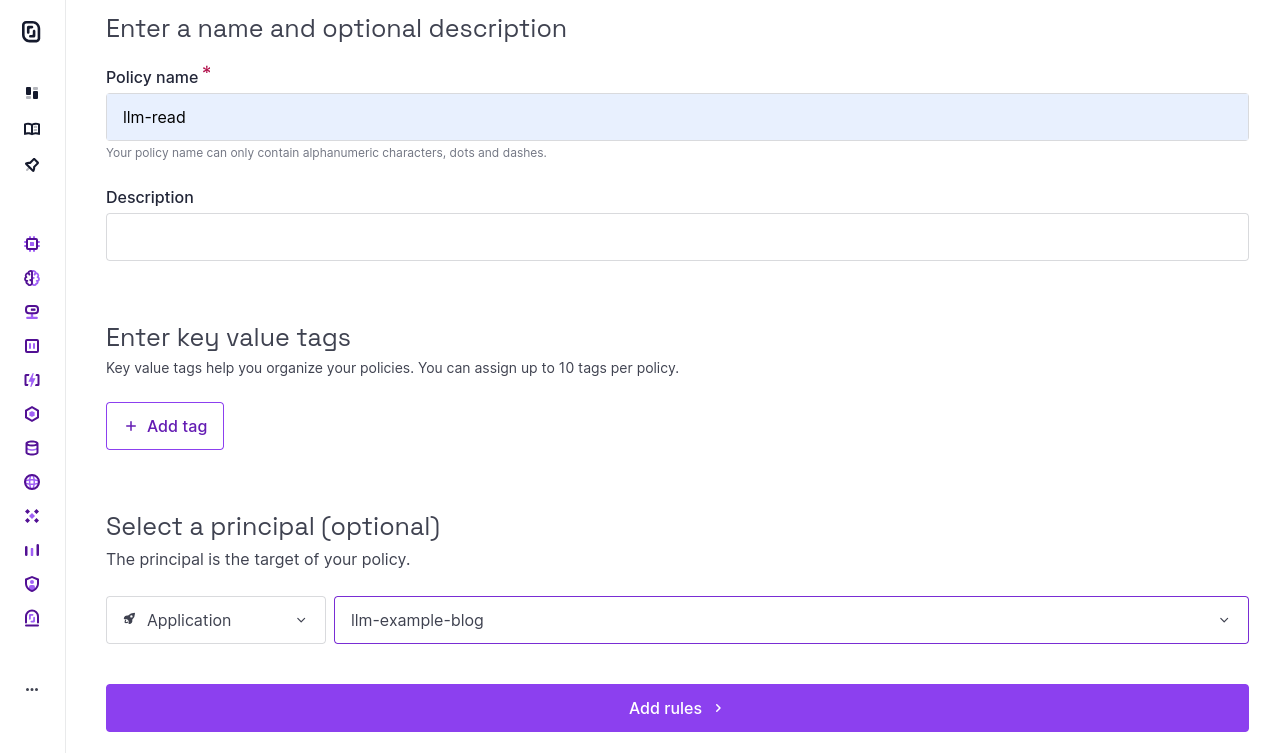

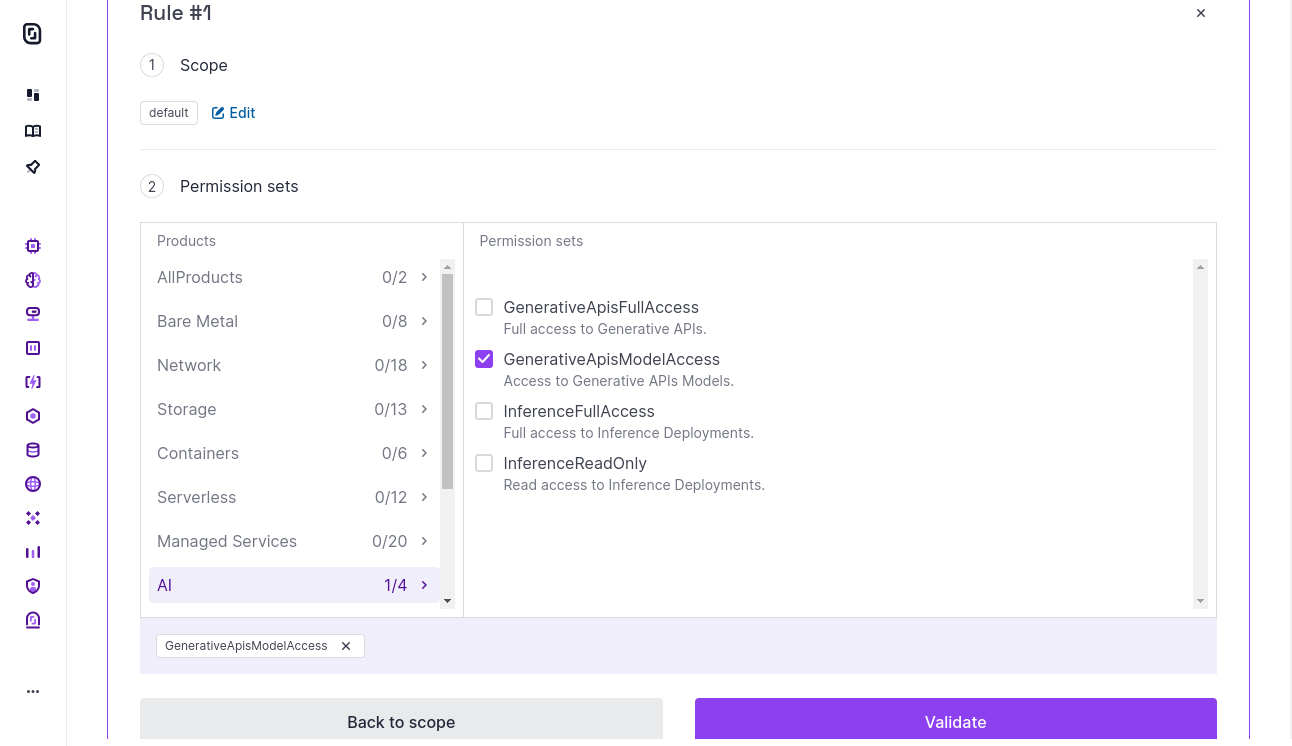

2. Create a policy for the application

- Open the Policies tab and create a new policy.

- Choose an appropriate name. In the field ‘Select a principal’, choose ‘Application’ and select the application you just created, then click on ‘Add rules’

- In the rule scope, select ‘Access to resources’ and click on ‘Validate’

- In the list of permissions, select AI > GenerativeApisModelAccess and click on ‘Validate’

- Click on ‘Create policy’

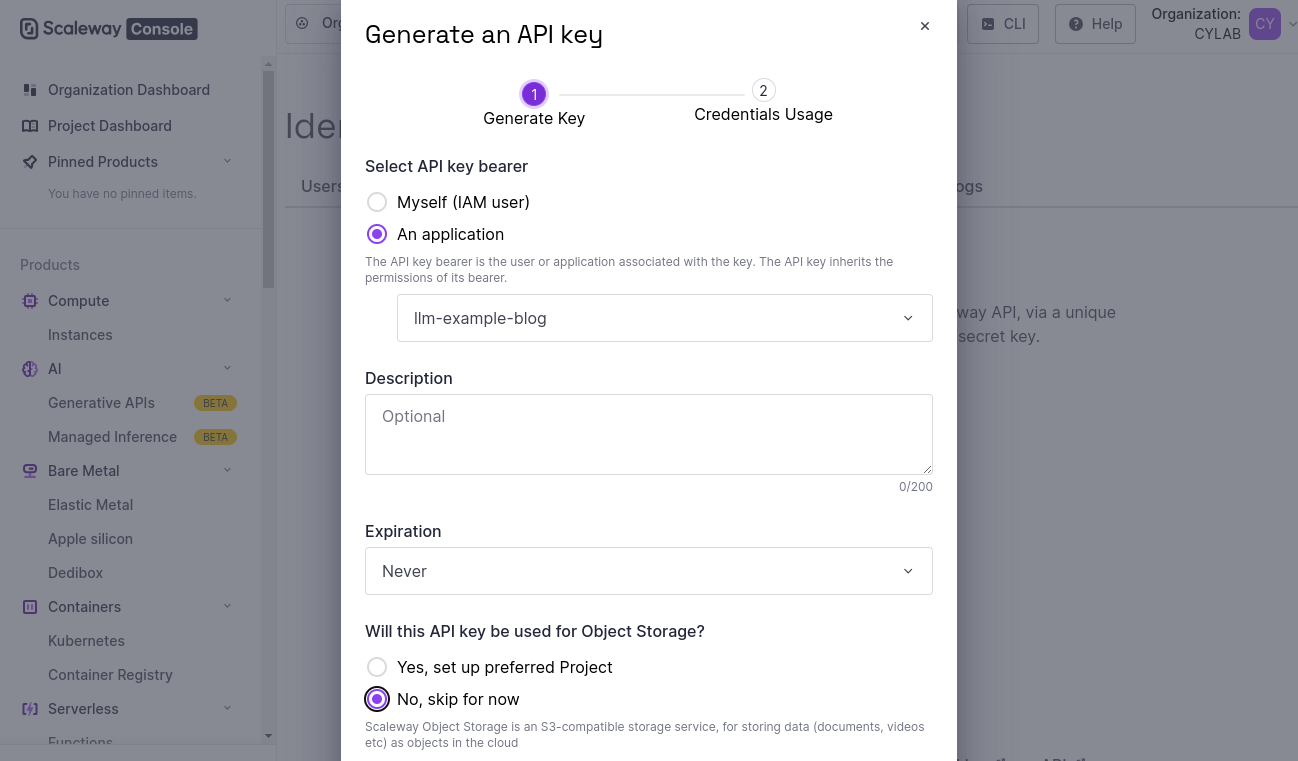

3. Create the access key for the application

- Go back to the Identity and Access Management (IAM) dashboard

- Open the API keys tab and click on ‘Generate an API key’

- In the Bearer field, select your application, then click on ‘Generate API key’.

- Copy and store your secret key. It will only be shown once!
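Since this secret key grants access to the Generative APIs of your organization, you may prefer not to hard-code it in your PHP files. Here is a minimal sketch, assuming you export the key in an environment variable named SCW_SECRET_KEY (a name chosen here for illustration, not one required by Scaleway or by the client library). The helper loadApiKey() is likewise hypothetical:

```php
<?php

/**
 * Read the Scaleway secret key from an environment variable instead of
 * hard-coding it in the source file.
 *
 * SCW_SECRET_KEY is a hypothetical variable name chosen for this sketch.
 */
function loadApiKey(string $varName = "SCW_SECRET_KEY"): string
{
    $key = getenv($varName);

    // getenv() returns false when the variable is not defined
    if ($key === false || trim($key) === "") {
        throw new RuntimeException("Environment variable $varName is not set");
    }

    return trim($key);
}
```

You would then run export SCW_SECRET_KEY="..." in your shell and replace the hard-coded key in the scripts with $key = loadApiKey();.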

Simple PHP LLM assistant

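The API exposed by Scaleway is compatible with the OpenAI API, so we can simply use the openai-php/client library to query the models. Install it with Composer (the library relies on a PSR-18 HTTP client; if your project does not already have one, you may also need to install one, such as guzzlehttp/guzzle):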

```shell
composer require openai-php/client
```

Create a file src/bot.php with the following content:

```php
<?php

require __DIR__ . "/../vendor/autoload.php";

// fill with your private api key
$key = "fill-me-with-your-private-key";

// base URI for scaleway
$base_uri = "https://api.scaleway.ai/v1";

// pay attention that the model name must be lowercased!
$model = "llama-3.1-8b-instruct";

$client = OpenAI::factory()
    ->withApiKey($key)
    ->withBaseUri($base_uri)
    ->make();

$result = $client->chat()->create([
    'model' => $model,
    'messages' => [
        ['role' => 'user', 'content' => 'Hello!'],
    ],
]);

echo $result->choices[0]->message->content . "\n";
```

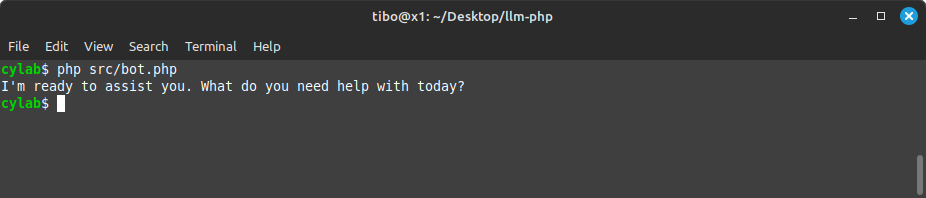

Now you can run the application with:

```shell
php src/bot.php
```

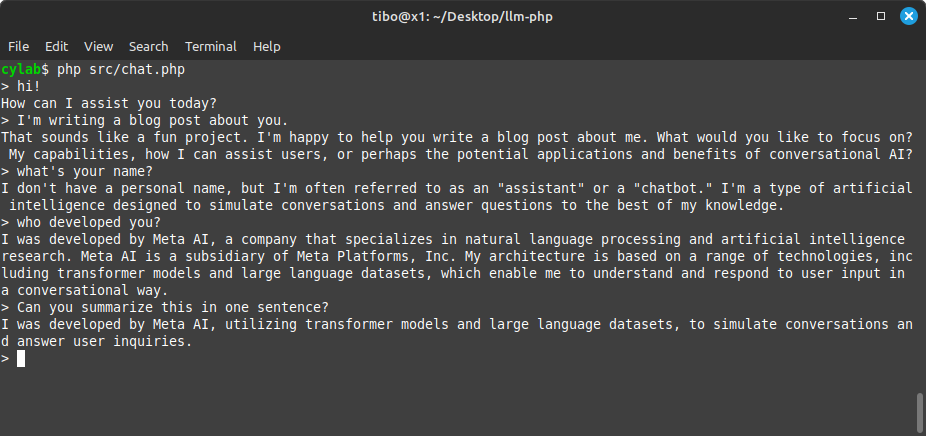
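Because the Scaleway API is compatible with the OpenAI API, what the client library does for a chat call essentially comes down to one HTTPS request. The sketch below only prepares that request (it does not send it), assuming the standard OpenAI route chat/completions appended to the base URI, a Bearer token in the Authorization header, and a JSON body with the model and messages. The function buildChatRequest() is hypothetical and not part of openai-php/client:

```php
<?php

/**
 * Build (but do not send) the HTTP request an OpenAI-compatible client
 * issues for a chat completion: POST {base_uri}/chat/completions with a
 * Bearer token and a JSON body.
 */
function buildChatRequest(string $baseUri, string $key, string $model, array $messages): array
{
    return [
        "method" => "POST",
        // avoid a double slash if the base URI ends with "/"
        "url" => rtrim($baseUri, "/") . "/chat/completions",
        "headers" => [
            "Authorization: Bearer " . $key,
            "Content-Type: application/json",
        ],
        "body" => json_encode(
            ["model" => $model, "messages" => $messages],
            JSON_THROW_ON_ERROR
        ),
    ];
}

$request = buildChatRequest(
    "https://api.scaleway.ai/v1",
    "fill-me-with-your-private-key",
    "llama-3.1-8b-instruct",
    [["role" => "user", "content" => "Hello!"]]
);

// print the request line and the JSON payload
echo $request["method"] . " " . $request["url"] . "\n";
echo $request["body"] . "\n";
```

This is mainly useful to understand what "OpenAI compatible" means; in practice the client library also handles sending the request, errors and response decoding for you.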

A simple chat bot

Large language models are actually stateless: they don’t keep track of the chat history or ‘remember’ what you asked or told them in previous queries. A chat system cannot work without this history, so it must be implemented in the client software, which sends the whole conversation with every request.

Here is a simple PHP example:

```php
<?php

require __DIR__ . "/../vendor/autoload.php";

// fill with your private api key
$key = "fill-me-with-your-private-key";

// base URI for scaleway
$base_uri = "https://api.scaleway.ai/v1";

// pay attention that the model name must be lowercased!
$model = "llama-3.1-8b-instruct";

$client = OpenAI::factory()
    ->withApiKey($key)
    ->withBaseUri($base_uri)
    ->make();

// keep history of chat
$messages = [
    // always better to start with a "system" message to define the context
    ['role' => 'system', 'content' => 'You are a helpful assistant'],
];

while (true) {
    // ask input from user
    $query = readline("> ");

    // readline() returns false at end of input (Ctrl+D): stop the chat
    if ($query === false) {
        break;
    }

    // append to local chat history
    $messages[] = ['role' => 'user', 'content' => $query];

    // send the complete history, so the model "sees" the whole conversation
    $result = $client->chat()->create([
        'model' => $model,
        'temperature' => 0.8,
        'messages' => $messages,
    ]);

    $reply = $result->choices[0]->message->content;
    echo $reply . "\n";

    // append LLM response to chat history
    $messages[] = ['role' => 'assistant', 'content' => $reply];
}
```

As you can see from the example, at each turn the user prompt and the model response are appended to the chat history, and the complete history is sent with the next request. This is what makes the ‘chat’ feature possible.
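A side effect of resending the whole history is that every request gets longer (and uses more prompt tokens) as the conversation goes on, while a model can only process a limited context. One simple way to keep it bounded, sketched here as a hypothetical helper trimHistory() that is not part of the client library, is to keep the system message(s) and only the most recent other messages:

```php
<?php

/**
 * Hypothetical helper: bound the chat history sent to the model.
 * Every "system" message is kept (placed first), followed by only the
 * $maxMessages most recent user/assistant messages.
 */
function trimHistory(array $messages, int $maxMessages): array
{
    $system = [];
    $others = [];

    foreach ($messages as $message) {
        if ($message['role'] === 'system') {
            $system[] = $message;
        } else {
            $others[] = $message;
        }
    }

    // a negative offset makes array_slice() return the last N elements;
    // the guard is needed because array_slice($others, -0) returns everything
    $recent = $maxMessages > 0 ? array_slice($others, -$maxMessages) : [];

    return array_merge($system, $recent);
}
```

In the chat loop, you could call $messages = trimHistory($messages, 20); just before $client->chat()->create(). Choosing an even limit keeps user/assistant pairs together, so the kept history starts with a user message. Older context is simply forgotten by the model with this approach.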

Going further

The response object returned by the client actually contains much more information, which can be useful (for example the token usage). The complete response looks like this:

```
{
  ["id"]=> string(41) "chatcmpl-75212ccb605642d6b25dd05db3efbe75"
  ["object"]=> string(15) "chat.completion"
  ["created"]=> int(1738341935)
  ["model"]=> string(30) "meta/llama-3.1-8b-instruct:fp8"
  ["systemFingerprint"]=> NULL
  ["choices"]=> array(1) {
    [0]=> {
      ["index"]=> int(0)
      ["message"]=> {
        ["role"]=> string(9) "assistant"
        ["content"]=> string(91) "How can I help you?"
        ["toolCalls"]=> array(0) { }
        ["functionCall"]=> NULL
      }
      ["finishReason"]=> string(4) "stop"
    }
  }
  ["usage"]=> {
    ["promptTokens"]=> int(99)
    ["completionTokens"]=> int(25)
    ["totalTokens"]=> int(124)
    ["promptTokensDetails"]=> NULL
    ["completionTokensDetails"]=> NULL
  }
}
```
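These fields can be read directly as properties of the $result object. As a sketch, the hypothetical function describeResult() below builds a one-line summary from the property names visible in the dump above (model, choices[0]->finishReason, usage->promptTokens, usage->completionTokens, usage->totalTokens). It assumes the object returned by $client->chat()->create() exposes them as shown; any object with the same shape works, which is what the test relies on:

```php
<?php

/**
 * Hypothetical helper: summarise the metadata of a chat completion result,
 * using the property names shown in the response dump.
 */
function describeResult(object $result): string
{
    $choice = $result->choices[0];
    $usage = $result->usage;

    $summary = sprintf(
        "model=%s prompt=%d completion=%d total=%d tokens",
        $result->model,
        $usage->promptTokens,
        $usage->completionTokens,
        $usage->totalTokens
    );

    // in the OpenAI API, "length" means the answer was cut off because
    // the maximum number of tokens was reached
    if ($choice->finishReason === "length") {
        $summary .= " (answer truncated)";
    }

    return $summary;
}
```

With the response dumped above, echo describeResult($result) . "\n"; would print model=meta/llama-3.1-8b-instruct:fp8 prompt=99 completion=25 total=124 tokens. Logging the token counts this way is a simple way to watch how the chat history makes each request grow.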

Moreover, besides the chat call, the client offers others, such as completions. You can find the complete list at https://github.com/openai-php/client

This blog post is licensed under CC BY-SA 4.0