Fixing "[circuit_breaking_exception] [parent] Data too large, data for [<http_request>]" ELK Stack error

Aug 23, 2021 by Georgi Nikolov

Recently, while working with the ELK Stack, I encountered an error I wasn't sure how to resolve: "[circuit_breaking_exception] [parent] Data too large, data for [<http_request>]". It is not immediately obvious where the error originates, but with some sleuthing I discovered that it is caused by Elasticsearch rejecting some requests in order to avoid possible out-of-memory errors, as detailed in the Elasticsearch Circuit Breaker documentation.

This error can come from the monitoring cluster when executing a simple search query. An example of such an error response:

    {
      "error" : {
        "root_cause" : [
          {
            "type" : "circuit_breaking_exception",
            "reason" : "[parent] Data too large, data for [<http_request>] would be [1004465832/957.9mb], which is larger than the limit of [986061209/940.3mb]",
            "bytes_wanted" : 1004465832,
            "bytes_limit" : 986061209
          }
        ],
        "type" : "circuit_breaking_exception",
        "reason" : "[parent] Data too large, data for [<http_request>] would be [1004465832/957.9mb], which is larger than the limit of [986061209/940.3mb]",
        "bytes_wanted" : 1004465832,
        "bytes_limit" : 986061209
      },
      "status" : 503
    }

The same error can be encountered when trying to access the data via Kibana:

[circuit_breaking_exception] [parent] Data too large, data for [<http_request>] would be [1004465832/957.9mb], which is larger than the limit of [986061209/940.3mb], real usage: [1004465832/957.9mb], new bytes reserved: [0/0b], with { bytes_wanted=1004465832 & bytes_limit=986061209 & durability="TRANSIENT" }
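
The limit quoted in these messages belongs to the parent circuit breaker, which Elasticsearch sizes as a percentage of the JVM heap. As a rough sketch, assuming the Elasticsearch 7.x defaults described in the circuit breaker documentation, the relevant settings would look like this if written out explicitly in elasticsearch.yml:

```yaml
# elasticsearch.yml (fragment), shown for illustration only, not as a recommended change.
# Assumption: Elasticsearch 7.x defaults, as described in the circuit breaker documentation.

# The parent breaker takes real heap usage into account by default.
indices.breaker.total.use_real_memory: true

# Limit of the parent breaker as a share of the JVM heap
# (95% when use_real_memory is true, 70% otherwise).
indices.breaker.total.limit: 95%
```

Because the limit is a fraction of the heap, giving Elasticsearch a larger heap raises the limit as well.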

Solution

There are different ways of resolving this issue, but the simplest one is to change the heap size used by Elasticsearch. This can be done by adapting the JVM options of Elasticsearch, as detailed in the Elasticsearch documentation.

As detailed in my previous posts on the ELK Stack, I am running the framework using Docker containers and initializing everything using a docker-compose.yml file. Because I am running the ELK Stack for testing purposes, I opted for setting the ES_JAVA_OPTS variable instead of adding a custom options file in the form of a jvm.options file. Another important point is that when deploying the ELK Stack for production purposes, it is recommended to set the JVM heap size to no more than 50% of the total available memory, and to keep it below the threshold for compressed object pointers (around 30 GB; the exact value varies by system).

To fix my problem, I simply changed the values of the ES_JAVA_OPTS variable in the docker-compose.yml file to increase the available heap size and afterwards restarted the containers. I changed the original java options from:

ES_JAVA_OPTS: "-Xmx256m -Xms256m"

to

ES_JAVA_OPTS: "-Xmx4g -Xms4g"

This resolved the issue right away. The elasticsearch service in my docker-compose.yml now looks like this:

    services:
      elasticsearch:
        build:
          context: elasticsearch/
          args:
            ELK_VERSION: $ELK_VERSION
        volumes:
          - type: bind
            source: ./elasticsearch/config/elasticsearch.yml
            target: /usr/share/elasticsearch/config/elasticsearch.yml
            read_only: true
          - type: volume
            source: elasticsearch
            target: /usr/share/elasticsearch/data
        ports:
          - "9200:9200"
          - "9300:9300"
        environment:
          ES_JAVA_OPTS: "-Xmx4g -Xms4g"
          ELASTIC_PASSWORD: changeme
          # Use single node discovery in order to disable production mode and avoid bootstrap checks.
          # see: https://www.elastic.co/guide/en/elasticsearch/reference/current/bootstrap-checks.html
          discovery.type: single-node
        networks:
          - elk

When using a custom options file instead, we would place it in the ./elasticsearch/config/jvm.options.d/ directory and share that directory with the container by bind-mounting it to /usr/share/elasticsearch/config/jvm.options.d/, in the same way the elasticsearch.yml file is mounted above.
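
A minimal sketch of that alternative, assuming an options file with the hypothetical name heap.options (I have not used this variant in my own setup):

```yaml
# docker-compose.yml (fragment)
services:
  elasticsearch:
    volumes:
      # Assumption: ./elasticsearch/config/jvm.options.d/ contains a file such as
      # heap.options (hypothetical name; it has to end in .options) with the lines:
      #   -Xms4g
      #   -Xmx4g
      - type: bind
        source: ./elasticsearch/config/jvm.options.d/
        target: /usr/share/elasticsearch/config/jvm.options.d/
        read_only: true
```

With this approach the heap flags should be dropped from ES_JAVA_OPTS, since that variable overrides the JVM options set in files.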

The JVM heap size used by Logstash can be changed in the same way, by changing the LS_JAVA_OPTS environment variable.
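
As a sketch, assuming a logstash service defined in the same docker-compose.yml (the heap values below are placeholders, not a recommendation):

```yaml
# docker-compose.yml (fragment)
services:
  logstash:
    environment:
      # Example values only; size the heap for your own workload.
      LS_JAVA_OPTS: "-Xmx1g -Xms1g"
```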

Conclusion

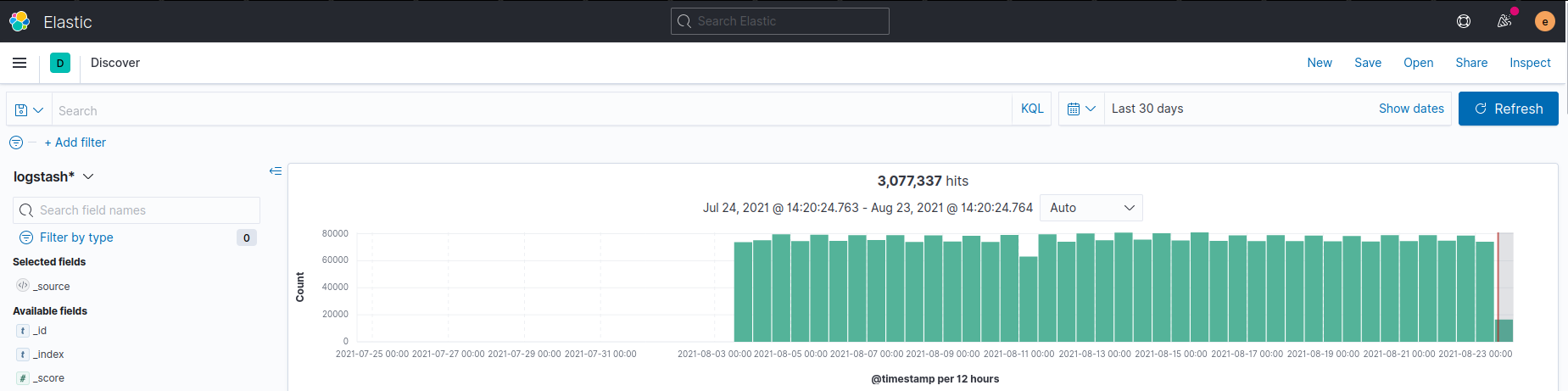

When trying out new technologies we often run into unexpected problems. Luckily, the ELK Stack is well documented and its support forums answer a lot of our questions. In my case, it took a bit of research to figure out how to change my configuration to get the stack up and running again. The ELK Stack has since been running for a bit less than a month, with more than 3 million entries added.

This blog post is licensed under CC BY-SA 4.0